The metrics used to measure results can be misleading when evaluating blockchain performance. As more blockchain networks emerge, the public will need metrics that emphasize clarity and efficiency, rather than exaggerated claims, to tell them apart.

In a conversation with BeInCrypto, Taraxa co-founder Steven Pu explained that it is becoming increasingly difficult to compare blockchain performance accurately, because many reported metrics rely on overly optimistic assumptions rather than evidence-based results. To combat this wave of misrepresentation, Pu proposes a new metric called TPS/$.

Why does the industry lack reliable benchmarks?

The need for clear distinctions grows with the number of layer 1 blockchain networks. As developers push for greater speed and efficiency, it becomes essential to rely on metrics that genuinely distinguish performance.

However, the industry still lacks reliable benchmarks for real-world efficiency, relying instead on sporadic, sentiment-driven waves of hype. According to Pu, misleading performance figures are currently saturating the market and obscuring true capabilities.

“Opportunists can easily harness simplified, exaggerated narratives to benefit themselves. Every possible technical concept and metric has been used to promote projects: TPS, finality latency, modularity, network node count, execution speed, parallelization, bandwidth capabilities, EVM compatibility, and more,” Pu told BeInCrypto.

Pu focused on how some projects use TPS figures as a marketing tactic, making their blockchain's performance sound more appealing than real-world conditions warrant.

Examining the misleading nature of TPS

Transactions per second (TPS) is a metric that refers to the average or sustained number of transactions a blockchain network can process and finalize per second under normal operating conditions.

However, it is often used to hype a project misleadingly, giving a distorted view of overall performance.

“A distributed network is a complex system that needs to be considered as a whole and in the context of its use cases. However, the market has a frightening habit of oversimplifying and overselling specific metrics or aspects of a project over the whole,” Pu said.

Pu argues that blockchain projects making extreme claims about a single metric like TPS may be compromising decentralization, security, and accuracy.

“Take TPS, for example. This one metric masks many other aspects of the network. How was the TPS achieved? What was sacrificed in the process? Suppose I run a single node with a WASM JIT VM, call it a network, assume no contention, and assume that all transactions can be parallelized. Under those assumptions, the TPS figure looks pretty good,” Pu said.

The Taraxa co-founder revealed the extent of these inflated metrics in a recent report.

A key discrepancy between theoretical and real-world TPS

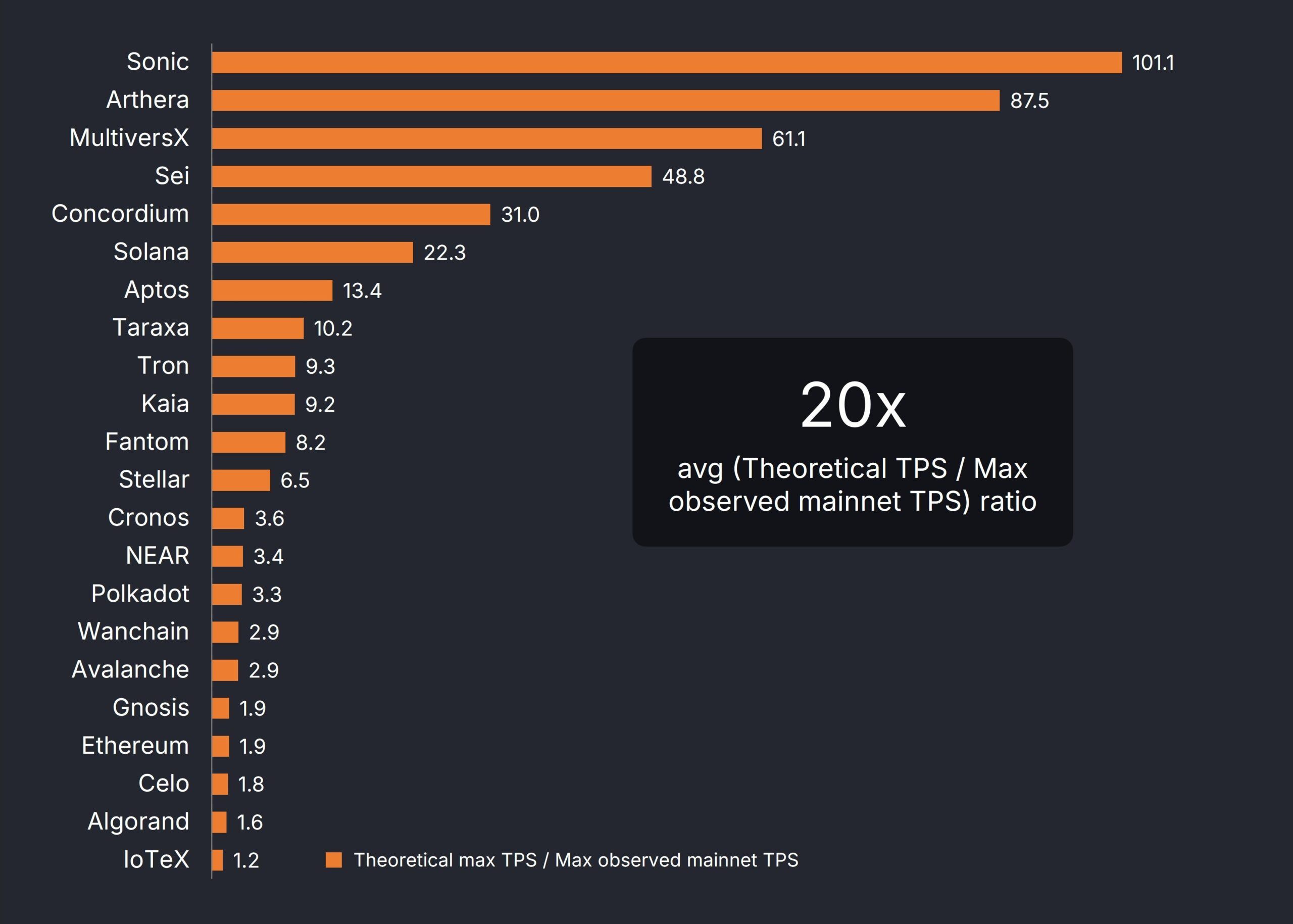

Pu set out to prove his point by measuring the gap between each blockchain's maximum theoretical TPS and the highest TPS historically achieved on its mainnet.

Across the 22 permissionless, single-shard networks examined, Pu found an average 20-fold gap between theory and reality. In other words, the theoretical figure was, on average, 20 times the maximum TPS observed on mainnet.
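The gap figure is a simple ratio. The sketch below shows one way to compute it, under two assumptions the report does not spell out: that each network's gap is its theoretical TPS divided by its maximum observed mainnet TPS, and that the "average" is the arithmetic mean of those per-network ratios. The network names and numbers are hypothetical placeholders, not Taraxa's data.

```python
from statistics import mean


def theory_to_mainnet_gap(theoretical_tps: float, max_observed_mainnet_tps: float) -> float:
    """How many times larger a theoretical TPS claim is than the best observed mainnet TPS."""
    if max_observed_mainnet_tps <= 0:
        raise ValueError("observed mainnet TPS must be positive")
    return theoretical_tps / max_observed_mainnet_tps


# Hypothetical figures for illustration only: (theoretical TPS, max observed mainnet TPS).
networks = {
    "chain_a": (50_000, 2_500),
    "chain_b": (10_000, 1_000),
    "chain_c": (90_000, 3_000),
}

# Per-network gap, then an unweighted mean across networks (an assumed averaging method).
gaps = {name: theory_to_mainnet_gap(t, o) for name, (t, o) in networks.items()}
average_gap = mean(gaps.values())

for name, gap in gaps.items():
    print(f"{name}: theoretical claim is {gap:.0f}x observed mainnet TPS")
print(f"average gap: {average_gap:.0f}x")
```

Averaging ratios weights every network equally; dividing total theoretical TPS by total observed TPS would instead weight networks by their size, so the averaging method matters when reproducing a headline figure like 20x.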

Taraxa found an average 20-fold difference between theoretical TPS and the maximum observed mainnet TPS. Source: Taraxa.

“Metric overestimation (of TPS, for example) is a response to the highly speculative, narrative-driven crypto market. Everyone wants to present their projects and technology in the best possible light, so there is a temptation to run tests under a huge number of unrealistic assumptions to reach inflated metrics,” Pu said.

In an attempt to counter these exaggerated figures, Pu developed his own performance metric.

Introducing TPS/$: A more balanced metric?

To meet the need for better performance metrics, Pu and his team developed the following: the maximum TPS realized on mainnet divided by the monthly dollar cost of running a single validator node, or TPS/$ for short.
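Written as a formula (the symbol names are chosen here for illustration and do not come from Taraxa's report), the metric is transactions per second per dollar of monthly validator cost:

```latex
\[
  \text{TPS}/\$ \;=\; \frac{\text{TPS}^{\max}_{\text{mainnet}}}{C^{\text{monthly}}_{\text{validator}}}
\]
```

Here $\text{TPS}^{\max}_{\text{mainnet}}$ is the highest throughput actually observed on the live mainnet and $C^{\text{monthly}}_{\text{validator}}$ is the estimated monthly cost, in US dollars, of one node meeting the network's minimum validator requirements.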

The metric also accounts for hardware efficiency while assessing performance based on verifiable TPS achieved on each network's live mainnet.

The 20x gap between theoretical and actual throughput persuaded Pu to exclude figures based solely on assumptions or lab conditions. He also wanted to expose how some blockchain projects inflate performance metrics by relying on costly infrastructure.

“Published network performance claims are often inflated by very expensive hardware. This is especially true for networks with highly centralized consensus mechanisms, where the throughput bottleneck moves from network latency to single-machine hardware performance,” Pu explained.

Pu's team identified the minimum validator hardware requirements for each network to determine the cost per validator node. They then estimated the monthly cost of each node, paying particular attention to the relative sizing of these costs, since they feed directly into the TPS/$ ratio.
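The article does not say how monthly costs were derived from hardware requirements. The sketch below assumes one simple approach: price a cloud instance that meets the minimum validator spec by the hour and multiply by roughly 730 hours per month. `ValidatorSpec`, its fields, and all prices and TPS values are hypothetical.

```python
from dataclasses import dataclass

HOURS_PER_MONTH = 730  # assumption: 365 * 24 / 12, a common billing approximation


@dataclass
class ValidatorSpec:
    """Hypothetical record of a network's minimum validator cost and observed throughput."""
    network: str
    hourly_instance_cost_usd: float  # assumed price of hardware meeting the minimum spec
    max_mainnet_tps: float           # highest TPS actually observed on mainnet

    def monthly_cost_usd(self) -> float:
        return self.hourly_instance_cost_usd * HOURS_PER_MONTH

    def tps_per_dollar(self) -> float:
        return self.max_mainnet_tps / self.monthly_cost_usd()


specs = [
    ValidatorSpec("modest_hw_chain", hourly_instance_cost_usd=0.50, max_mainnet_tps=730),
    ValidatorSpec("heavy_hw_chain", hourly_instance_cost_usd=5.00, max_mainnet_tps=3_650),
]

# Rank networks by TPS/$ rather than by raw TPS.
for s in sorted(specs, key=ValidatorSpec.tps_per_dollar, reverse=True):
    print(f"{s.network}: ${s.monthly_cost_usd():,.0f}/month, {s.tps_per_dollar():.2f} TPS/$")
```

In this toy example the heavier-hardware chain reports five times the raw TPS but scores half as well on TPS/$, which is the kind of trade-off the metric is meant to surface.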

“The TPS/$ metric therefore tries to correct what are perhaps the two worst categories of misinformation, by forcing TPS performance to be measured on mainnet and by revealing the inherent trade-offs of very expensive hardware,” Pu added.

Pu also highlighted two easily identifiable characteristics to take into account: whether a network is permissionless and whether it is single-shard.
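Pu's comparison covered only networks that are both permissionless and single-shard. A minimal sketch of that screening step, assuming each network has already been classified on the two characteristics (the `NetworkProfile` type and the example networks are hypothetical):

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NetworkProfile:
    """Hypothetical record of the two screening characteristics."""
    name: str
    permissionless: bool  # can anyone join the validator set without approval?
    single_shard: bool    # is all state kept in one shared, unsharded state?


def comparable(networks: list[NetworkProfile]) -> list[NetworkProfile]:
    """Keep only networks that are both permissionless and single-shard."""
    return [n for n in networks if n.permissionless and n.single_shard]


candidates = [
    NetworkProfile("open_single_state", permissionless=True, single_shard=True),
    NetworkProfile("consortium_chain", permissionless=False, single_shard=True),
    NetworkProfile("sharded_chain", permissionless=True, single_shard=False),
]
print([n.name for n in comparable(candidates)])
```

Filtering first keeps TPS/$ comparisons like-for-like, so a permissioned or sharded design cannot top the ranking simply because it sidesteps the constraints the other networks operate under.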

Permissioned vs. permissionless networks: Which promotes decentralization?

Whether a blockchain operates as a permissioned or permissionless network says a great deal about its degree of security.

A permissioned blockchain is a closed network where access and participation are limited to predefined user groups, and joining requires permission from a central authority or trusted group. On a permissionless blockchain, anyone can participate.

According to Pu, the permissioned model is at odds with the philosophy of decentralization.

“Whether a network is permissioned is another good metric. If network validator membership is controlled by a single entity, or there is only a single entity (as with all layer 2s), the network is permissioned. This indicates whether or not the network is actually decentralized,” Pu said.

Networks governed by a central authority tend to be more vulnerable to certain weaknesses, which makes these indicators increasingly important over time.

“In the long run, what we really need is a standardized battery of attack vectors for L1 infrastructure. This would help uncover the weaknesses and trade-offs of particular architectural designs. Many of today's mainstream L1s make unexplained sacrifices in security and decentralization, and these would come to light organically under an industry-wide standard,” Pu added.

Similarly, understanding whether a network uses state sharding or maintains a single-shard state reveals how unified its data management is.

State sharding vs. single-shard state: Understanding data unity

In blockchain performance, latency refers to the delay between submitting a transaction to a network and having it confirmed and included in a block on the blockchain. It measures how long it takes for a transaction to be processed and become a permanent part of the distributed ledger.
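Following that definition, latency for each transaction is simply its finalization time minus its submission time. The sketch below assumes a client has already recorded both timestamps (how they are collected is not covered in the article); the transaction IDs and timestamps are hypothetical, and transactions that never finalize are left out rather than counted as zero.

```python
from statistics import median


def latencies_seconds(submitted_at: dict[str, float], finalized_at: dict[str, float]) -> list[float]:
    """Per-transaction latency (finalization time minus submission time) for finalized transactions."""
    return [finalized_at[tx] - submitted_at[tx] for tx in submitted_at if tx in finalized_at]


# Hypothetical Unix timestamps in seconds; tx3 was submitted but never finalized.
submitted = {"tx1": 100.0, "tx2": 101.0, "tx3": 102.0}
finalized = {"tx1": 102.5, "tx2": 104.0}

lat = latencies_seconds(submitted, finalized)
print(f"latencies: {lat}, median: {median(lat)} s")
```

Reporting a median (or a high percentile) alongside the count of unfinalized transactions gives a fuller picture than a single best-case number.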

Knowing whether a network uses state sharding or a single-shard state reveals a lot about its latency.

A state-sharded network divides blockchain data into multiple independent parts called shards. Each shard operates somewhat independently and has no direct, real-time access to the complete state of the entire network.

In contrast, non-state-sharded networks have a single state shared across the entire network, so all nodes can access and process the same complete dataset.

Pu pointed out that state-sharded networks aim to increase storage and transaction capacity. However, transactions often have to be processed across multiple independent shards, which frequently leads to longer finality latency.

He added that many projects employing a sharding approach inflate throughput by simply replicating the network, rather than building a truly integrated and scalable architecture.

“A state-sharded network that does not share state is simply making unconnected copies of the network. If you run 1,000 copies of an L1 network independently, it is obviously incorrect to add up the throughput across all the copies and present it as a single network. You have just made independent copies,” Pu said.
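Pu's 1,000-copy example comes down to simple arithmetic. The sketch contrasts the summed headline figure with the throughput available to any workload that needs one common state; the 1,000 TPS per copy is a hypothetical number, and the function name is illustrative.

```python
def advertised_vs_shared_state_tps(per_copy_tps: float, copies: int) -> tuple[float, float]:
    """Contrast a summed headline figure with what one shared state can actually serve.

    Returns (advertised, shared_state):
      advertised   - throughput of every independent copy simply added together
      shared_state - ceiling for a workload that needs one common state; because the
                     copies share no state, such a workload can only use one copy
    """
    advertised = per_copy_tps * copies
    shared_state = per_copy_tps
    return advertised, shared_state


# Pu's example of 1,000 independent copies; 1,000 TPS per copy is a hypothetical figure.
advertised, shared_state = advertised_vs_shared_state_tps(per_copy_tps=1_000, copies=1_000)
print(f"advertised: {advertised:,} TPS, usable by one shared state: {shared_state:,} TPS")
```

The headline figure grows linearly with the number of copies while the shared-state ceiling does not move, which is why summing throughput across unconnected copies says nothing about a single network's capacity.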

Based on his research into the efficiency of blockchain metrics, Pu highlighted the need for fundamental changes in how projects are evaluated and funded, and ultimately in how they succeed.

What fundamental changes do blockchain evaluations require?

Pu's insights offer a notable alternative in a layer 1 blockchain space where misleading performance metrics increasingly compete for attention. Reliable, effective benchmarks are essential to counter these misstatements.

“You only know what you can measure, and right now in crypto, the numbers look more like hype than objective measurements. Standardized, transparent measurements allow easy comparisons across product options, so developers and users understand what trade-offs they are making,” Pu said.

Adopting standardized, transparent benchmarks will encourage informed decision-making and promote real progress beyond merely advertised claims as the industry matures.